
<div class="metadata">

<span id="Pierre - Shaping vectors"></span>

==Shaping Vectors==

'''Pierre Depaz'''

</div>

A vector is a mathematical entity consisting of a series of numbers grouped together to represent another entity. Vectors are often associated with spatial operations: the entities they represent can be either a point or a direction. In computer science, vectors are used to represent objects through their features, the measurable properties of an object (for instance, a human can be said to have features such as age, height, skin pigmentation, credit score and political leaning). Today, [[Pierre-comment-02|such representations]] are at the core of contemporary machine learning models, allowing a new kind of ''translation'' between the world and the computer.

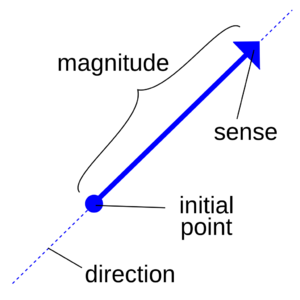

[[File:Vector-magnitude-direction.png|alt=Description of the different components of a vector|thumb|A vector is a very spatial thing.]]

This essay sketches out some of the implications of using vectors to represent non-computational entities in computational terms, as other visual mnemotechnics did in the past, by pointing to the epistemological consequences of choosing one syntactic system over another. On one side, binary encoding allows a translation between physical phenomena and concepts, between electricity and numbers, enabling [[Pierre-comment-03|the implementation of symbolic logic]] in a formal and mechanical way (highlighting that truth, here, is nothing more than a mathematical concept). On the other side, vectors suggest new perspectives on at least two levels: their relativity in storing (encoding) content and their locality in retrieving (decoding) content.

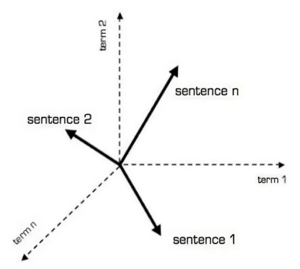

[[File:Vector space model-transformed.png|alt=A representation of vector space for linguistics.|thumb|A vector space shows how sentences fall along the intensity of words.]]

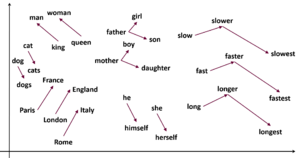

[[File:Word2vec2.png|alt=A 2-dimensional representation of word2vec.|thumb|In this word space, notice how the concept of "parent" or "capital" is implicitly spatialized.]]
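The caption's point, that a relation such as "capital of" becomes a direction in space, can be sketched with simple vector arithmetic. This is a minimal illustration, not word2vec itself: the six two-dimensional coordinates are invented for the example, and the helper names `add`, `sub` and `closest` are hypothetical. Real word embeddings typically have hundreds of dimensions, and such offsets hold only approximately.

```python
import math

# Hypothetical 2-D "word vectors", invented so that the step from a
# country to its capital points in roughly the same direction each time.
words = {
    "france": [1.0, 1.0],
    "paris": [1.0, 3.0],
    "germany": [4.0, 1.2],
    "berlin": [4.1, 3.1],
    "italy": [7.0, 0.9],
    "rome": [6.9, 3.0],
}


def add(a: list[float], b: list[float]) -> list[float]:
    """Component-wise sum: move point a along direction b."""
    return [x + y for x, y in zip(a, b)]


def sub(a: list[float], b: list[float]) -> list[float]:
    """Component-wise difference: the direction leading from b to a."""
    return [x - y for x, y in zip(a, b)]


def closest(target: list[float], exclude: set[str]) -> str:
    """Return the word whose point lies nearest to target (Euclidean)."""
    candidates = (w for w in words if w not in exclude)
    return min(candidates, key=lambda w: math.dist(words[w], target))


# A direction rather than a point: roughly "capital of".
capital_of = sub(words["paris"], words["france"])
# Start from "germany" and follow the same direction.
guess = add(words["germany"], capital_of)
print(closest(guess, exclude={"paris", "france", "germany"}))  # berlin
```

Here each point stands for a word, while the difference between two points is a direction, echoing the distinction between vectors as points and as directions.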

In machine learning, a vector holds the current values of each property of a given object: for example, a human would have a value of 0 for the property "melting point", since the property does not apply to them, while water would have a non-zero value for it (273.15, if measured in kelvin).
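A minimal sketch of this idea, assuming a small, fixed list of features shared by every object. The feature names (`melting_point_k`, `height_m`, `is_alive`), their values and the helper `to_vector` are all hypothetical, chosen only for illustration. Every object receives a slot for every feature of the space, and a property the object lacks is encoded as 0.

```python
# Hypothetical feature list shared by every object in this toy space.
FEATURES = ["melting_point_k", "height_m", "is_alive"]


def to_vector(properties: dict[str, float]) -> list[float]:
    """Return one value per feature, in FEATURES order.

    A property the object does not have is encoded as 0.0, which is how
    a human ends up with a "melting point" of 0.
    """
    return [float(properties.get(name, 0.0)) for name in FEATURES]


human = to_vector({"height_m": 1.7, "is_alive": 1.0})
water = to_vector({"melting_point_k": 273.15})

print(human)  # [0.0, 1.7, 1.0]
print(water)  # [273.15, 0.0, 0.0]
```

Because every vector has the same length and the same order of features, any two objects can be compared position by position, which is what makes proximity between them measurable.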

Vectors thus [[Pierre-comment-04|always contain the potential features of the whole space]] in which they exist, and are more or less tightly defined in relation to one another (as opposed to, say, alphabetical or cardinal ordering). [[Pierre-comment-05|The proximity, or distance, of vectors]] to each other is therefore essential to how we use them to make sense. [[Pierre-comment-01|Meaning]] is no longer created through logical combinations, but through spatial proximity in a specific semantic space. [[Pierre-comment-08|Truth]] [[Pierre-comment-06|moves]] from (binary) exactitude to (vector) [[Pierre-comment-07|approximation]].
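Proximity can be measured in more than one way. The sketch below compares two common measures on three invented vectors: Euclidean distance (how far apart two points are) and cosine similarity (how closely two directions align). The vectors and helper names are hypothetical, and which measure a particular system relies on varies; neither is implied by the text above.

```python
import math


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance between two points; smaller means closer."""
    return math.dist(a, b)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional vectors, invented for illustration.
cat = [0.9, 0.8, 0.1]
dog = [0.8, 0.9, 0.2]
car = [0.1, 0.2, 0.9]

# By both measures, "cat" sits nearer to "dog" than to "car".
print(euclidean_distance(cat, dog) < euclidean_distance(cat, car))  # True
print(cosine_similarity(cat, dog) > cosine_similarity(cat, car))  # True
```

Neither function knows anything about cats, dogs or cars: the only information available is the relative position of the vectors, so the result is a degree of closeness rather than a yes-or-no answer.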

As we retrieve information stored in vectors, we therefore navigate semantic spaces. Such retrieval is only useful if it is meaningful to us, and to be meaningful it moves across vectors that lie close to one another, relying on [[Pierre-comment-09|re-configurable]], (hyper-)local coherence to suggest a meaningful structuring of content.
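Retrieval by proximity can be sketched as a nearest-neighbor search: rank every stored vector by its similarity to a query and keep only the closest few. This is a minimal, brute-force sketch assuming cosine similarity and an invented four-word space; the function name `nearest` and its parameter `k` are hypothetical. Large systems usually rely on approximate indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearest(query: list[float], space: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the labels of the k stored vectors most similar to query."""
    ranked = sorted(
        space,
        key=lambda label: cosine_similarity(query, space[label]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical stored vectors, invented for illustration.
space = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
    "truck": [0.2, 0.1, 0.8],
}

# Only the local neighborhood of the query comes back.
print(nearest([1.0, 0.7, 0.0], space))  # ['cat', 'dog']
```

Whatever falls outside the top `k` is simply not returned, however relevant it might be by another criterion; the result is coherent only locally, around the query.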

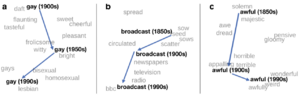

[[File:Semantic change.png|alt=A graph showing the evolution of three vector spaces over decades.|thumb|The company words keep changes over time.]]

Given the existence of features in relation to one another, and the construction of meaning through the proximity of vectors, we can see how semantic space is both malleable in storing meaning and structural in retrieving meaning, which raises questions of literacy. A further line of inquiry is the process through which [[Pierre-comment-11|this semantic space]] is shaped, namely [[Pierre-comment-12|corporate]] [[Pierre-comment-13|training]], and how that space, in turn, shapes us into specific '''communities of perceiving'''.

<noinclude>

[[Category:Content form]]

</noinclude>

Latest revision as of 11:18, 7 February 2024
